
MPI C

If you have not done so yet, download the sample files with:

git clone https://hidekiCCS:@bitbucket.org/hidekiCCS/hpc-workshop.git

Hello World

Example directory: /home/fuji/hpc-workshop/SimpleExample/C/hello_MPI

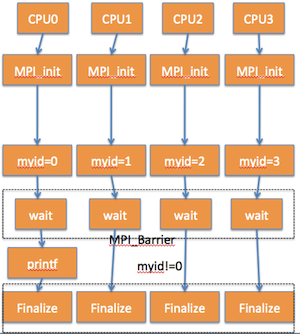

```c
/* hello_mpi.c: display a message on the screen */
#include <stdio.h>
#include <mpi.h>

int main(int argc, char *argv[])
{
    int myid, numproc;

    /* Initialize MPI */
    MPI_Init(&argc, &argv);

    /* get myid (rank) and # of processes */
    MPI_Comm_size(MPI_COMM_WORLD, &numproc);
    MPI_Comm_rank(MPI_COMM_WORLD, &myid);

    printf("hello from %d\n", myid);

    /* wait until all processes reach this point
       (this does not fix the order in which their output appears) */
    MPI_Barrier(MPI_COMM_WORLD);

    if (myid == 0) {
        /* only rank 0 does this */
        printf("%d processors said hello!\n", numproc);
    }

    MPI_Finalize();
    return 0;
}
```
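The rank (`myid`) and process count (`numproc`) obtained above are what MPI programs typically use to divide work among processes. As a minimal sketch, assuming a loop over `n` items that should be split into contiguous blocks, the hypothetical helper `block_range` below (plain C, not an MPI function) computes the index range owned by one rank.

```c
/* Block decomposition sketch: which part of the index range 0..n-1
 * does rank `myid` own when `numproc` ranks share it?
 * block_range() is a hypothetical helper, not part of MPI. */
#include <assert.h>

/* Sets [*first, *last) to the half-open index range owned by rank myid.
 * The first (n % numproc) ranks get one extra element, so block sizes
 * differ by at most one. */
void block_range(int n, int numproc, int myid, int *first, int *last)
{
    assert(n >= 0 && numproc > 0 && myid >= 0 && myid < numproc);

    int base = n / numproc;   /* minimum number of elements per rank */
    int extra = n % numproc;  /* number of ranks that get one more   */

    /* ranks below `extra` hold base + 1 elements each */
    *first = myid * base + (myid < extra ? myid : extra);
    *last  = *first + base + (myid < extra ? 1 : 0);
}
```

Inside `hello_mpi.c` it could be called after `MPI_Comm_rank` as `block_range(n, numproc, myid, &first, &last);` followed by `for (i = first; i < last; i++)`, where `n`, `first`, `last` and `i` are illustrative names. Giving the remainder to the lowest ranks keeps every block within one element of the others.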

Compile

Intel

```bash
module load intel-psxe
mpicc hello_mpi.c
```

OpenMPI

```bash
module load openmpi/4.1.6
mpicc hello_mpi.c
```

Example Job Script

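Save the following as `slurmscript`. It loads `intel-psxe`; if you compiled with OpenMPI, load `openmpi/4.1.6` instead so that `mpirun` matches the MPI library `a.out` was built with.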

```bash
#!/bin/bash
#SBATCH --partition=defq        # Partition (default is 'defq')
#SBATCH --qos=normal            # Quality of Service
#SBATCH --job-name=helloC_MPI   # Job name
#SBATCH --time=00:10:00         # Wall time (hh:mm:ss)
#SBATCH --nodes=2               # Number of nodes
#SBATCH --ntasks-per-node=1     # Number of tasks (MPI processes) per node
#SBATCH --cpus-per-task=1       # Number of CPUs per task (OpenMP threads)
#SBATCH --gres=mic:0            # Number of co-processors (Xeon Phi)

module load intel-psxe

pwd
echo "DIR=" $SLURM_SUBMIT_DIR
echo "TASKS_PER_NODE=" $SLURM_TASKS_PER_NODE
echo "NNODES=" $SLURM_NNODES
echo "NTASKS=" $SLURM_NTASKS
echo "JOB_CPUS_PER_NODE=" $SLURM_JOB_CPUS_PER_NODE
echo $SLURM_NODELIST

mpirun ./a.out
echo "End of Job"
```
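With `--nodes=2` and `--ntasks-per-node=1`, Slurm starts one MPI process on each of two nodes, so `SLURM_NTASKS` (and `numproc` in `hello_mpi.c`) should be 2. `SLURM_TASKS_PER_NODE`, echoed by the script, uses the compressed form described in the `sbatch` documentation: a task count per node, with `(xN)` marking a count repeated on N consecutive nodes, e.g. `2(x3),1` for three nodes running two tasks and a fourth running one. The sketch below, a hypothetical C helper named `count_slurm_tasks`, expands that string into a total task count; it assumes only that format and returns -1 when a group does not match it.

```c
/* Sketch: total task count from a SLURM_TASKS_PER_NODE string such as
 * "1(x2)" (1 task on each of 2 nodes) or "2(x3),1".
 * count_slurm_tasks() is a hypothetical helper; it assumes the
 * "count(xrepeat),count,..." format and returns -1 when a group
 * does not match it. */
#include <assert.h>
#include <stdlib.h>

long count_slurm_tasks(const char *s)
{
    assert(s != NULL);
    long total = 0;
    char *end;

    while (*s != '\0') {
        long count = strtol(s, &end, 10);   /* tasks per node */
        if (end == s || count < 0)
            return -1;                      /* no valid number here */
        s = end;

        long repeat = 1;                    /* default: a single node */
        if (s[0] == '(' && s[1] == 'x') {
            repeat = strtol(s + 2, &end, 10);
            if (end == s + 2 || *end != ')' || repeat <= 0)
                return -1;                  /* malformed "(xN)" part */
            s = end + 1;                    /* skip the ')' */
        }
        total += count * repeat;

        if (*s == ',')
            s++;                            /* move to the next group */
        else if (*s != '\0')
            return -1;                      /* unexpected character */
    }
    return total;
}
```

For this job script the variable should read `1(x2)`, which gives 2. In a real program, check that `getenv("SLURM_TASKS_PER_NODE")` did not return `NULL` before passing its result in.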

Submit a job

sbatch slurmscript

Check the status of your jobs

squeue -u USERNAME

